Have you ever wondered how your camera captures the vibrant reds of a sunset or the soft pastels of a flower? It’s not magic—it’s science and engineering.

In this article, we’ll explore how cameras see colours, breaking down the process from light entering the lens to the final colourful image displayed on your screen.

Understanding Light and Colour

To understand how cameras see colours, we first need to understand light. Light is made up of electromagnetic waves, and visible light—the part of the spectrum we can see—consists of different wavelengths corresponding to different colours.

- Short wavelengths = Blue/violet hues

- Medium wavelengths = Green hues

- Long wavelengths = Red hues
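
To make the three bands above concrete, here is a minimal Python sketch that sorts a wavelength into one of them. The band edges in nanometres are rough, illustrative assumptions (real colour boundaries are gradual), and `classify_wavelength` is a hypothetical helper name.

```python
# Approximate band edges in nanometres. These cut-offs are illustrative
# assumptions; the visible spectrum has no sharp internal boundaries.
BANDS = [
    (380, 495, "short (blue/violet)"),
    (495, 570, "medium (green)"),
    (570, 750, "long (yellow through red)"),
]

def classify_wavelength(nm: float) -> str:
    """Return the rough band a wavelength falls in, or 'not visible'."""
    for low, high, name in BANDS:
        if low <= nm < high:
            return name
    return "not visible"

for nm in (450, 530, 650, 1000):
    print(nm, "nm ->", classify_wavelength(nm))
```

The last value, 1000 nm, lies in the infrared and falls outside every band, so it is reported as not visible.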

Every object reflects, absorbs, or transmits different wavelengths to different degrees, and the light it sends towards a camera (or your eyes) is what gets perceived as colour.

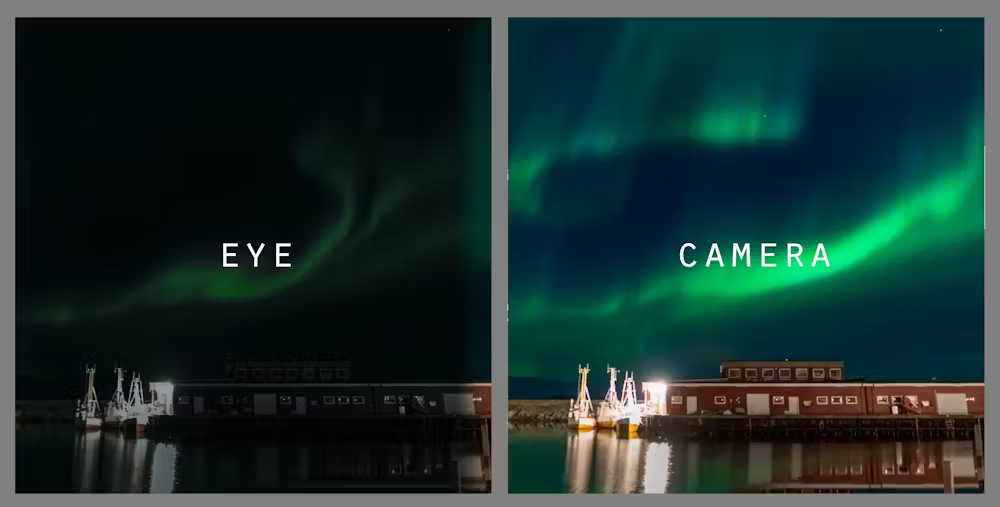

How Human Eyes Perceive Colour

Human eyes have three types of cone cells, most sensitive to long, medium, and short wavelengths of light, which correspond roughly to red, green, and blue. The brain compares the signals from these cells to interpret a wide range of colours.

Cameras are designed to mimic this three-channel approach with sensor technology, recording colour as red, green, and blue (RGB) values. While they don’t replicate the complexity of human vision, they come surprisingly close through clever engineering.

The Science Behind Camera Sensors

Modern digital cameras use sensors like CMOS (Complementary Metal-Oxide-Semiconductor) or CCD (Charge-Coupled Device) to detect light. On their own, however, the light-sensitive elements in these sensors are colour-blind: they measure only how much light arrives, not its colour.

So, how do they see colour?

That’s where the colour filter array comes in.

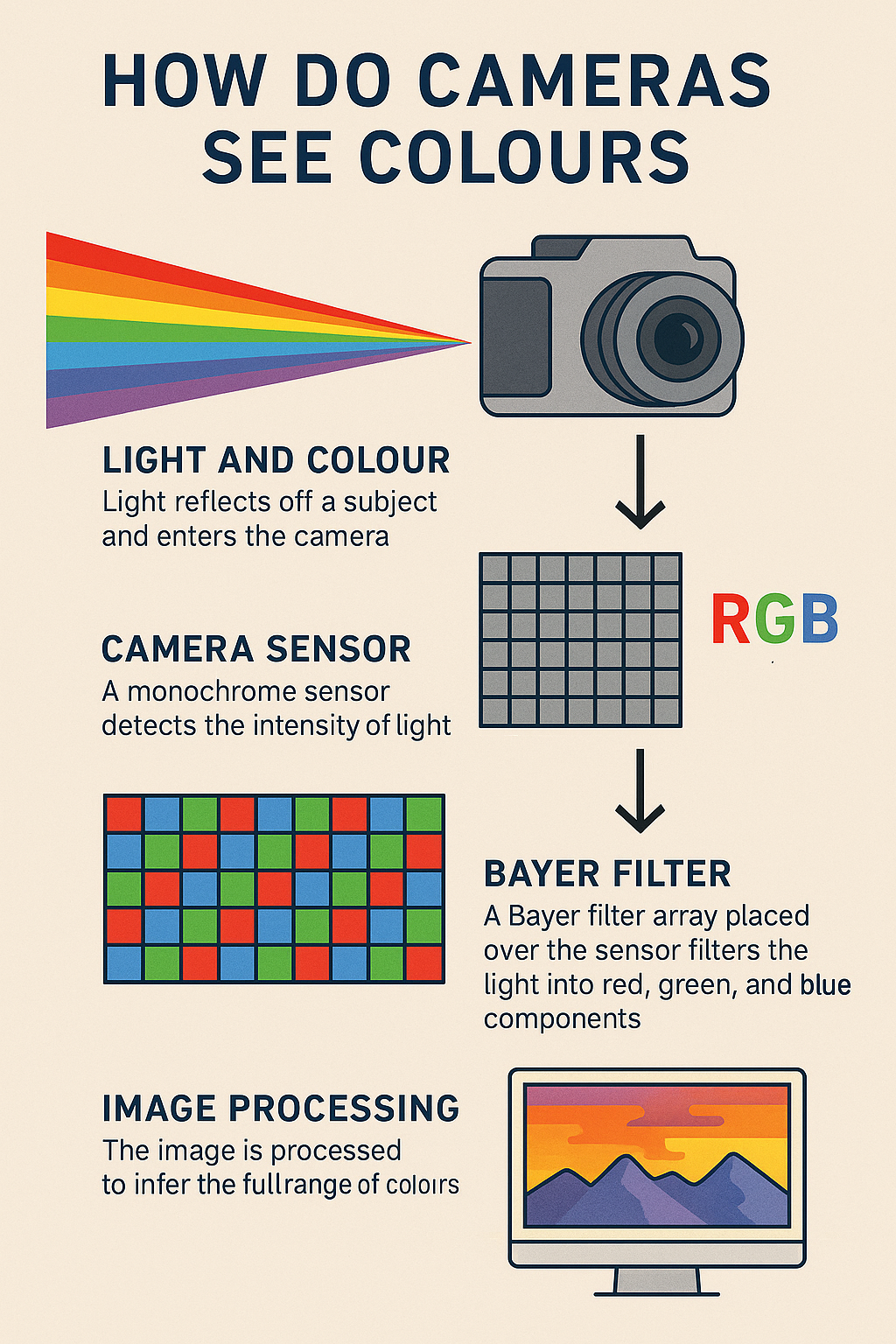

The Role of the Bayer Filter

To capture colour information, most digital cameras use a Bayer filter array. This is a grid of tiny red, green, and blue filters placed over the pixels of the sensor.

A standard Bayer pattern consists of:

- 50% Green (the human eye is most sensitive to green light, so green gets twice as many pixels)

- 25% Red

- 25% Blue

Each pixel captures just one colour. The camera’s processor then uses a process called demosaicing to reconstruct a full-colour image by interpolating the missing colours for each pixel.
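
The idea can be sketched in a few lines of plain Python. This is a simplified illustration, not what any particular camera does: it assumes an RGGB layout, and it fills in missing colours by averaging same-colour samples in each pixel’s 3x3 neighbourhood, which is far cruder than production demosaicing. The function names are hypothetical.

```python
def bayer_channel(row: int, col: int) -> str:
    """Colour of the filter over a pixel, assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def mosaic(image):
    """Simulate the sensor: keep only the channel each pixel's filter passes."""
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]

def demosaic(raw):
    """Rebuild (R, G, B) per pixel by averaging same-colour samples
    found in the 3x3 neighbourhood (clipped at the image edges)."""
    height, width = len(raw), len(raw[0])
    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    channel = bayer_channel(rr, cc)
                    sums[channel] += raw[rr][cc]
                    counts[channel] += 1
            out_row.append(tuple(sums[ch] / counts[ch] for ch in "RGB"))
        result.append(out_row)
    return result

# A flat orange patch: every pixel has the same true colour.
flat = [[(200, 100, 50)] * 4 for _ in range(4)]
raw = mosaic(flat)
print(raw[0])               # [200, 100, 200, 100]: one number per pixel
print(demosaic(raw)[1][1])  # (200.0, 100.0, 50.0): full colour recovered
```

On a flat patch the reconstruction is exact. On real images with fine detail, interpolation has to guess, which is why demosaicing can produce artefacts such as colour fringing along sharp edges.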

Image Processing and Colour Interpretation

After capturing light data through the sensor and Bayer filter, the camera applies a series of algorithms to produce a final image. This includes:

- Demosaicing

- White balancing

- Colour correction

- Sharpening and noise reduction

These steps transform raw sensor data into a visually pleasing, full-colour image.
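
Of the steps listed above, colour correction is perhaps the least intuitive, so here is a small sketch of one common way it is expressed: a 3x3 matrix that remixes the red, green, and blue channels. The matrix values below are made up for illustration; real cameras use values tuned to their own sensor and filters.

```python
# Hypothetical colour correction matrix. Each row sums to 1.0, so neutral
# greys (R == G == B) pass through unchanged while colours gain saturation.
CCM = [
    [1.50, -0.30, -0.20],
    [-0.25, 1.45, -0.20],
    [-0.10, -0.40, 1.50],
]

def colour_correct(pixel, matrix=CCM):
    """Mix the three channels through the matrix and clamp to the 0..1 range."""
    return tuple(
        min(1.0, max(0.0, sum(w * v for w, v in zip(row, pixel))))
        for row in matrix
    )

print(colour_correct((0.5, 0.5, 0.5)))  # a grey stays (approximately) grey
print(colour_correct((0.6, 0.3, 0.2)))  # a dull red becomes more saturated
```

Keeping each row’s sum at 1.0 is a design choice that protects the white balance set in the previous step: whatever was neutral before the matrix is still neutral after it.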

White Balance and Colour Accuracy

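White balance is crucial in how a camera interprets colours. Different light sources (sunlight, tungsten, fluorescent) emit light with different colour temperatures, measured in kelvin (K): lower values look warmer (more orange), and higher values look cooler (more blue).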

For example:

- Daylight = ~5500K (neutral white)

- Tungsten = ~3200K (warm yellow)

- Shade = ~7000K (cool blue)

Cameras use auto white balance (AWB) or manual settings to compensate for these differences, aiming to make whites appear truly white and other colours accurate by comparison.
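
A manual white balance can be sketched as a set of per-channel gains worked out from something in the scene that is known to be neutral grey. The example below is a simplified illustration, not a camera’s actual algorithm: it assumes linear RGB values between 0 and 1, uses green as the reference channel, and the function names and sample values are hypothetical.

```python
def gains_from_grey_patch(patch_rgb):
    """Per-channel gains that make a known-neutral patch come out grey.
    Green is kept as the reference channel (an assumed convention)."""
    r, g, b = patch_rgb
    return (g / r, 1.0, g / b)

def apply_white_balance(pixel, gains):
    """Scale each channel by its gain, clamping at the maximum value of 1.0."""
    return tuple(min(1.0, value * gain) for value, gain in zip(pixel, gains))

# A neutral grey surface shot under warm light: too much red, too little blue.
grey_under_tungsten = (0.60, 0.50, 0.30)
gains = gains_from_grey_patch(grey_under_tungsten)

print(gains)
print(apply_white_balance(grey_under_tungsten, gains))  # roughly equal channels
print(apply_white_balance((0.40, 0.20, 0.05), gains))   # another pixel, same gains
```

Auto white balance has to estimate the same gains without a known neutral reference, for example by making assumptions about the average colour of the scene, which is why it can be fooled by scenes dominated by a single colour.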

RAW vs JPEG: Impact on Colours

The file format also affects how colours are recorded and how far they can be adjusted afterwards:

- RAW files retain the sensor data with little or no in-camera processing, allowing for extensive post-processing and accurate colour correction.

- JPEG files are processed and compressed in the camera, which often boosts contrast and saturation but leaves less room for colour correction later.

Photographers who prioritize colour accuracy typically shoot in RAW format.
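
One reason for that flexibility is precision. The sketch below is a deliberately simplified illustration: it assumes a 12-bit RAW file and an 8-bit JPEG (typical but not universal figures), ignores tone curves and compression, and simply counts how many distinct tones survive when the darkest tenth of the range is brightened four times. `distinct_levels_after_boost` is a hypothetical helper.

```python
def distinct_levels_after_boost(bit_depth, boost=4, shadow_fraction=0.1):
    """Count distinct 8-bit display values left in the darkest tones
    after brightening them by `boost`."""
    max_value = 2 ** bit_depth - 1
    shadows = range(int(max_value * shadow_fraction) + 1)
    # Brighten each stored value, then express it on a 0..255 display scale.
    boosted = {min(255, round(v * boost * 255 / max_value)) for v in shadows}
    return len(boosted)

print(distinct_levels_after_boost(8))   # 26 tones from the 8-bit data
print(distinct_levels_after_boost(12))  # 103 tones from the 12-bit data
```

With fewer distinct tones to stretch, heavy edits to an 8-bit file are more likely to show visible banding in smooth areas such as skies.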

Why Colours Look Different on Different Devices

Ever noticed that a photo looks different on your phone versus your laptop? That’s due to:

- Screen calibration

- Colour profiles (sRGB, Adobe RGB, etc.)

- Display technology (LCD, OLED, etc.)

Different devices interpret and display colour values differently unless they’re properly calibrated for colour accuracy.
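
To see why the colour profile matters, consider the same stored value, “pure green”, interpreted under two profiles. The sketch below converts it to CIE xy chromaticity coordinates, a device-independent way of describing a colour. It assumes linear RGB values (the profiles’ tone curves are ignored) and uses the commonly published D65 conversion matrices rounded to four decimals; the helper names are hypothetical.

```python
# Linear RGB -> CIE XYZ matrices (D65 white point), rounded to four decimals.
SRGB_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]
ADOBE_RGB_TO_XYZ = [
    [0.5767, 0.1856, 0.1882],
    [0.2974, 0.6273, 0.0753],
    [0.0270, 0.0707, 0.9911],
]

def to_xyz(linear_rgb, matrix):
    """Multiply a linear RGB triple by a 3x3 conversion matrix."""
    return tuple(sum(w * v for w, v in zip(row, linear_rgb)) for row in matrix)

def chromaticity(xyz):
    """Reduce XYZ to (x, y), which describes colour independent of brightness."""
    total = sum(xyz)
    return (xyz[0] / total, xyz[1] / total)

pure_green = (0.0, 1.0, 0.0)
for name, matrix in (("sRGB", SRGB_TO_XYZ), ("Adobe RGB", ADOBE_RGB_TO_XYZ)):
    x, y = chromaticity(to_xyz(pure_green, matrix))
    print(f"{name}: x={x:.2f}, y={y:.2f}")
```

The two results differ (about x=0.30, y=0.60 for sRGB and x=0.21, y=0.71 for Adobe RGB), so identical numbers describe a noticeably more saturated green in Adobe RGB. Software or a display that assumes the wrong profile will therefore show the wrong colour.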

Tips for Capturing True-to-Life Colours

Here are a few tips to help your camera capture colours as accurately as possible:

- Use a grey card to set custom white balance.

- Shoot in RAW for greater post-processing control.

- Use calibrated monitors when editing photos.

- Avoid mixed lighting conditions (e.g., combining daylight and incandescent light).

- Learn which colour profile or picture style your camera applies, and adjust its settings to suit the scene.

Conclusion

So, how do cameras see colours? Through a fascinating combination of light physics, sensor technology, colour filters, and software processing. While they don’t see colours exactly as our eyes do, modern cameras are incredibly advanced and capable of capturing the world in stunning, lifelike detail.

Understanding this process not only helps photographers get better shots but also deepens our appreciation for the complex technology behind every click of the shutter.